Knowledge Base Question & Answer Agent

Building our first proof of concept agentic system

Note: this is part of a series that serves as a development journal for my personal exploration into AI Agents, their potential applications, and limitations. In these posts I utilize a custom low-code tool I developed to allow me to quickly prototype, visualize, and get quick feedback on various agentic architectures and applications. More information on that tool and why I built it (instead of using one of the many existing tools) can be found in a previous post: Building an Agentic Workflow Prototyping Platform.

Let’s Compose a Simple Question & Answer Agent

We’re going to build a simple agentic workflow that, given a question about the employees at a fictional company, searches employee records and does its best to answer the question, following the typical flow of what is referred to as RAG (Retrieval Augmented Generation).

In addition, we will layer in an Evaluation Agent that evaluates the output of our Q&A Agent and flags the question & answer pairs based on criteria that we define.

We will output the Question, Answer, Evaluation triplets into a new datasource and use that data to compose a view that allows us to review the results and see if the evaluation has flagged anything abnormal in our Q&A Agent’s responses.

Finally, we’ll have some fun with our agent and try to manipulate it via some prompt injection and see if anything surprises us :)

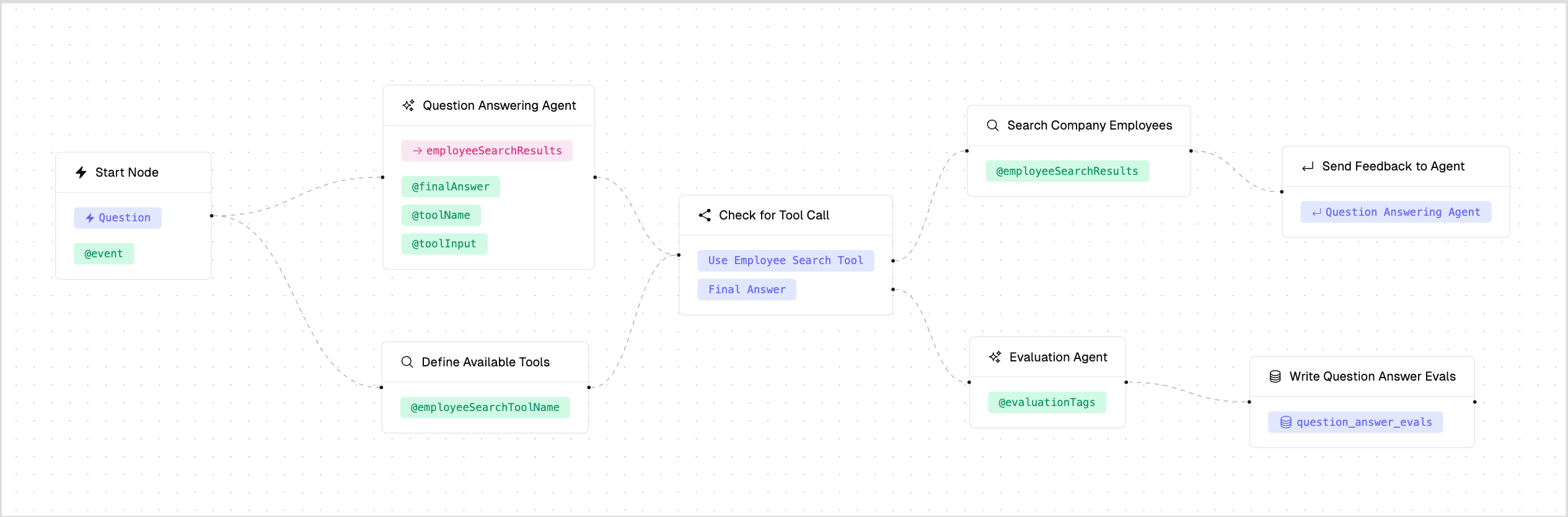

Here is the high-level architecture of our system:

Step 1: Create the Datasources

We’ll need to create a datasource for the trigger event (question) and one for the responses (question_answer_evals), along with our sample employees datasource, with the following schemas:

// question schema (the input to our workflow)
{
  "question": "string"
}

// question_answer_evals schema (the output of our workflow)
{
  "question": "string",
  "answer": "string",
  "evaluation_tags": ["string"]
}

// employees schema. Our reference datasource we will be using to help answer questions.
{
  "name": "string",
  "position": "string",
  "manager": "string",
  "userDefinedResponsibilities": "string"
}

and we add them accordingly:
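For anyone following along outside the tool, here is a rough Python sketch of the same three schemas as TypedDicts. The class names and the missing_fields helper are hypothetical, chosen only for illustration, and are not part of the tool:

```python
from typing import List, TypedDict


class Question(TypedDict):
    """Input event that triggers the workflow."""
    question: str


class QuestionAnswerEval(TypedDict):
    """Output record written at the end of the workflow."""
    question: str
    answer: str
    evaluation_tags: List[str]


class Employee(TypedDict):
    """Reference record the agent searches to answer questions."""
    name: str
    position: str
    manager: str
    userDefinedResponsibilities: str


def missing_fields(record: dict, schema: type) -> list:
    """Return the schema field names that are absent from the record.

    Hypothetical helper: it only checks key presence, not value types.
    """
    return [field for field in schema.__annotations__ if field not in record]
```

A quick presence check like missing_fields({"name": "Frank Ricard"}, Employee) lists the fields still needed before a record matches the employees schema.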

Let’s also seed our employees datasource with some data:

{
  "name": "Frank Ricard",
  "manager": "Tobias Smith",
  "position": "VP of People",
  "userDefinedResponsibilities": "I manage the HR team at AcmeCo"
},
{
  "name": "Samantha Green",
  "manager": "Crystal Johnson",
  "position": "Head of Human Resources",
  "userDefinedResponsibilities": "I am in charge of Human Resources"
},
{
  "name": "Frank Reynolds",
  "manager": "Charlie Day",
  "position": "VP of Engineering",
  "userDefinedResponsibilities": "I lead Engineering at AcmeCo"
},
{
  "name": "Joe DiVita",
  "manager": "Michael Scott",
  "position": "Software Engineer",
  "userDefinedResponsibilities": "I build things at AcmeCo"
}

Step 2: Create the Workflow

We’ll create the workflow in our tool as follows:

We’ll have a Start Node triggered by our “Question” Event. This question will then be an input into an LLM Node that will act as our Question Answering Agent.

The Question Answering Agent LLM Node will be provided a list of tools in its prompt (in this case just a single tool: EmployeeSearchTool) and will attempt to answer the question, using the tools when it sees fit. It will continue in a loop, thinking and invoking the tools, until it decides it has reached its final answer.

Once the final answer is provided, the workflow enters another LLM Node for our Evaluation Agent, which, given a list of quality control constraints, will evaluate the question & answer pair and apply any flags that we define. Finally, these Question, Answer, Evaluation Tag triplets will be saved to our question_answer_evals datasource.
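The flow described above can be sketched in plain Python. This is only an approximation under assumptions: the function names, the shape of the decision dictionary, and the MAX_STEPS cap are hypothetical, and the three fake_* stubs stand in for the real LLM and query nodes:

```python
from typing import Callable, Dict, List

MAX_STEPS = 5  # assumed safety cap so a confused agent cannot loop forever


def run_workflow(
    question: str,
    qa_agent: Callable[[str, str], Dict[str, str]],
    search_tool: Callable[[str], str],
    eval_agent: Callable[[str, str], List[str]],
) -> Dict[str, object]:
    """Loop the Q&A agent until it gives a final answer, then evaluate it."""
    feedback = ""  # tool results fed back into the agent on the next pass
    answer = ""    # stays empty if the agent never reaches a final answer
    for _ in range(MAX_STEPS):
        decision = qa_agent(question, feedback)
        if decision["action"] == "final_answer":
            answer = decision["content"]
            break
        # Otherwise the agent asked for the tool: run it and loop again.
        feedback = search_tool(decision["content"])
    tags = eval_agent(question, answer)
    return {"question": question, "answer": answer, "evaluation_tags": tags}


# Stubs standing in for the real nodes (hypothetical behavior).
def fake_qa_agent(question: str, feedback: str) -> Dict[str, str]:
    if not feedback:
        return {"action": "employeeSearchTool", "content": question}
    return {"action": "final_answer", "content": f"Based on the records: {feedback}"}


def fake_search(query: str) -> str:
    return "Frank Reynolds, VP of Engineering"


def fake_eval(question: str, answer: str) -> List[str]:
    return []


result = run_workflow(
    "Who is the VP of Engineering at AcmeCo?", fake_qa_agent, fake_search, fake_eval
)
```

The returned dictionary has the same three keys as the question_answer_evals schema, which is what the final step of the workflow writes out.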

Start Node

This node triggers the workflow, takes in the Question event, and sets it to a global definition that can be accessed in other steps of the workflow:

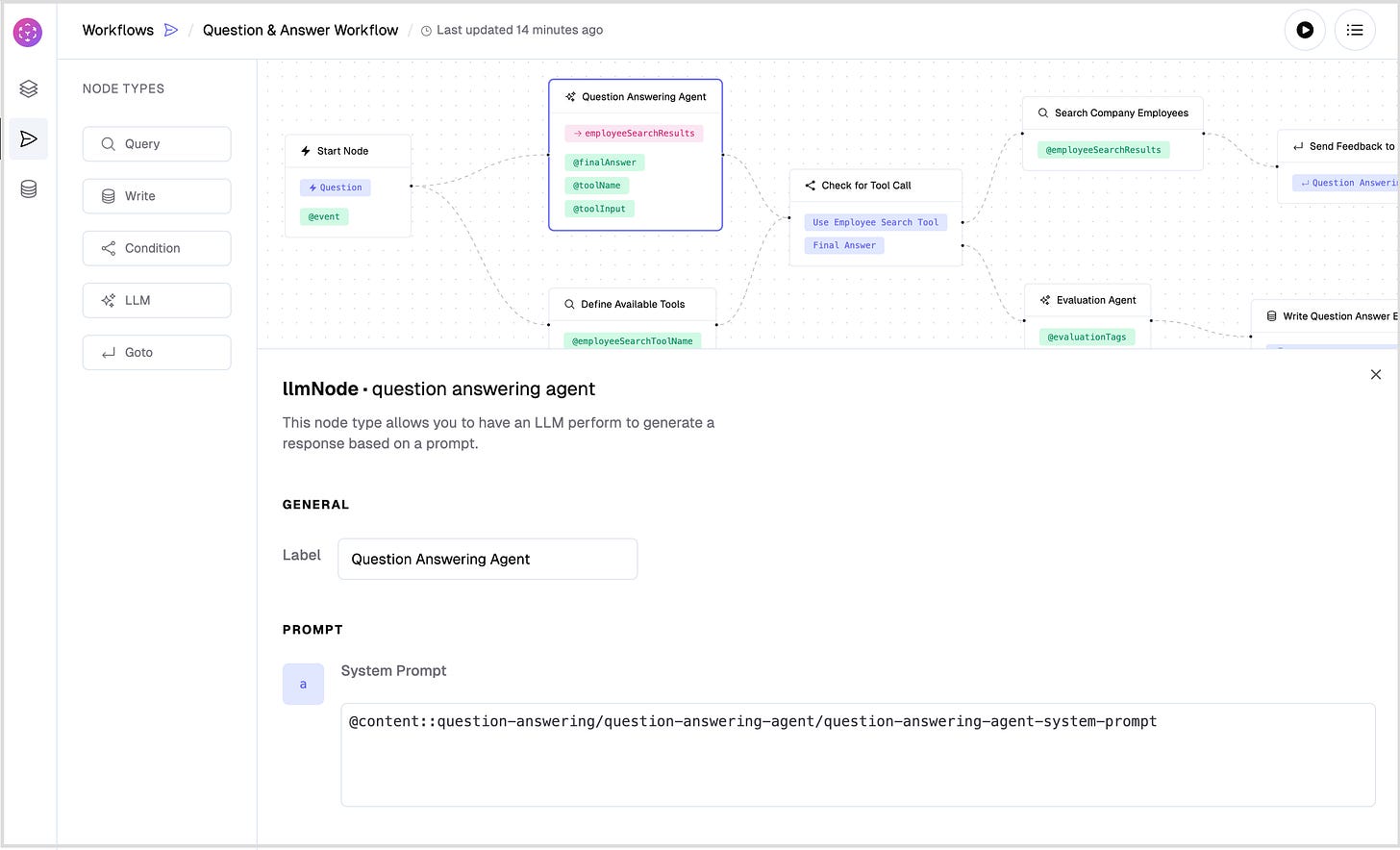

LLM Node - Q&A Agent

Here is where things get interesting. First let’s create the System Prompt instructions for this node:

And then set the System Prompt in the Workflow Editor to use that Content document as the source:

For the prompt we’ll simply ask it to “Answer this question:” and then interpolate in the question from the event variable we defined in the Start Node:
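As a rough illustration of that interpolation step, here is a minimal Python sketch. The tool’s actual template syntax is not shown in this post, so string.Template is only a stand-in, and build_prompt is a hypothetical name. The event shape is borrowed from the curl requests later in this post:

```python
from string import Template

# Stand-in for the tool's prompt template; the real syntax may differ.
PROMPT_TEMPLATE = Template("Answer this question: $question")


def build_prompt(event: dict) -> str:
    """Interpolate the question from the trigger event into the prompt."""
    return PROMPT_TEMPLATE.substitute(question=event["data"]["question"])


event = {
    "name": "question",
    "data": {"question": "who is the VP of engineering at AcmeCo?"},
}
prompt = build_prompt(event)
```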

We’ll also want to set a feedback key on the Q&A agent. The EmployeeSearchTool sets this key, so we’ll want the Q&A agent to consider this input if it is set. Finally, we set workflow definitions for the agent’s tool decisions and its final answer:

Agent Control Flow

The control flow for tool usage is straightforward. We have a Conditional Node that checks the output of the Q&A agent to see if it wants to use the employeeSearchTool or submit its final answer and branches accordingly.
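The branching logic might look something like the sketch below. It assumes the agent replies with a small JSON object, either {"tool": "employeeSearchTool", "input": "..."} or {"final_answer": "..."}. That output format and the route function are assumptions made for illustration, not the tool’s actual contract:

```python
import json
from typing import Tuple


def route(agent_output: str) -> Tuple[str, str]:
    """Return (branch, payload) for a raw Q&A agent reply."""
    try:
        parsed = json.loads(agent_output)
    except json.JSONDecodeError:
        # Unparseable output is treated as a final answer rather than
        # sending the workflow back around the loop.
        return ("final_answer", agent_output.strip())
    if not isinstance(parsed, dict):
        return ("final_answer", agent_output.strip())
    if parsed.get("tool") == "employeeSearchTool":
        return ("employeeSearchTool", str(parsed.get("input", "")))
    return ("final_answer", str(parsed.get("final_answer", "")))
```

Falling back to the final answer branch on malformed output is a design choice: it keeps a confused agent from looping, at the cost of occasionally passing a poor answer on to the Evaluation Agent.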

Employee Search Tool

We’ll set up the employee search tool to simply query our employees datasource, which by default in our system contains a column holding the vector embedding of each record. We can instruct the query node to search against these vector embeddings, and set the results as the feedback key for the Q&A Agent:

Finally, we have a Goto Node that returns to the Q&A agent node after the tool is done. From there the Q&A Agent will run again, but this time with the feedback from the employeeSearchTool.
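To make the idea of searching against embeddings concrete, here is a toy Python sketch over the seed records from Step 1. It substitutes a simple bag-of-words count for a real embedding model and ranks records by cosine similarity. The embed, cosine, and employee_search names are hypothetical, and the tool’s real query node will certainly differ:

```python
import math
import re
from collections import Counter

EMPLOYEES = [
    {"name": "Frank Ricard", "manager": "Tobias Smith", "position": "VP of People",
     "userDefinedResponsibilities": "I manage the HR team at AcmeCo"},
    {"name": "Samantha Green", "manager": "Crystal Johnson", "position": "Head of Human Resources",
     "userDefinedResponsibilities": "I am in charge of Human Resources"},
    {"name": "Frank Reynolds", "manager": "Charlie Day", "position": "VP of Engineering",
     "userDefinedResponsibilities": "I lead Engineering at AcmeCo"},
    {"name": "Joe DiVita", "manager": "Michael Scott", "position": "Software Engineer",
     "userDefinedResponsibilities": "I build things at AcmeCo"},
]


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def employee_search(query: str, top_k: int = 1) -> list:
    """Return the top_k records most similar to the query."""
    q = embed(query)
    ranked = sorted(
        EMPLOYEES,
        key=lambda e: cosine(q, embed(" ".join(e.values()))),
        reverse=True,
    )
    return ranked[:top_k]
```

Note that each record’s vector is built from every field, including the free-text userDefinedResponsibilities, so whatever employees write there influences which records get retrieved.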

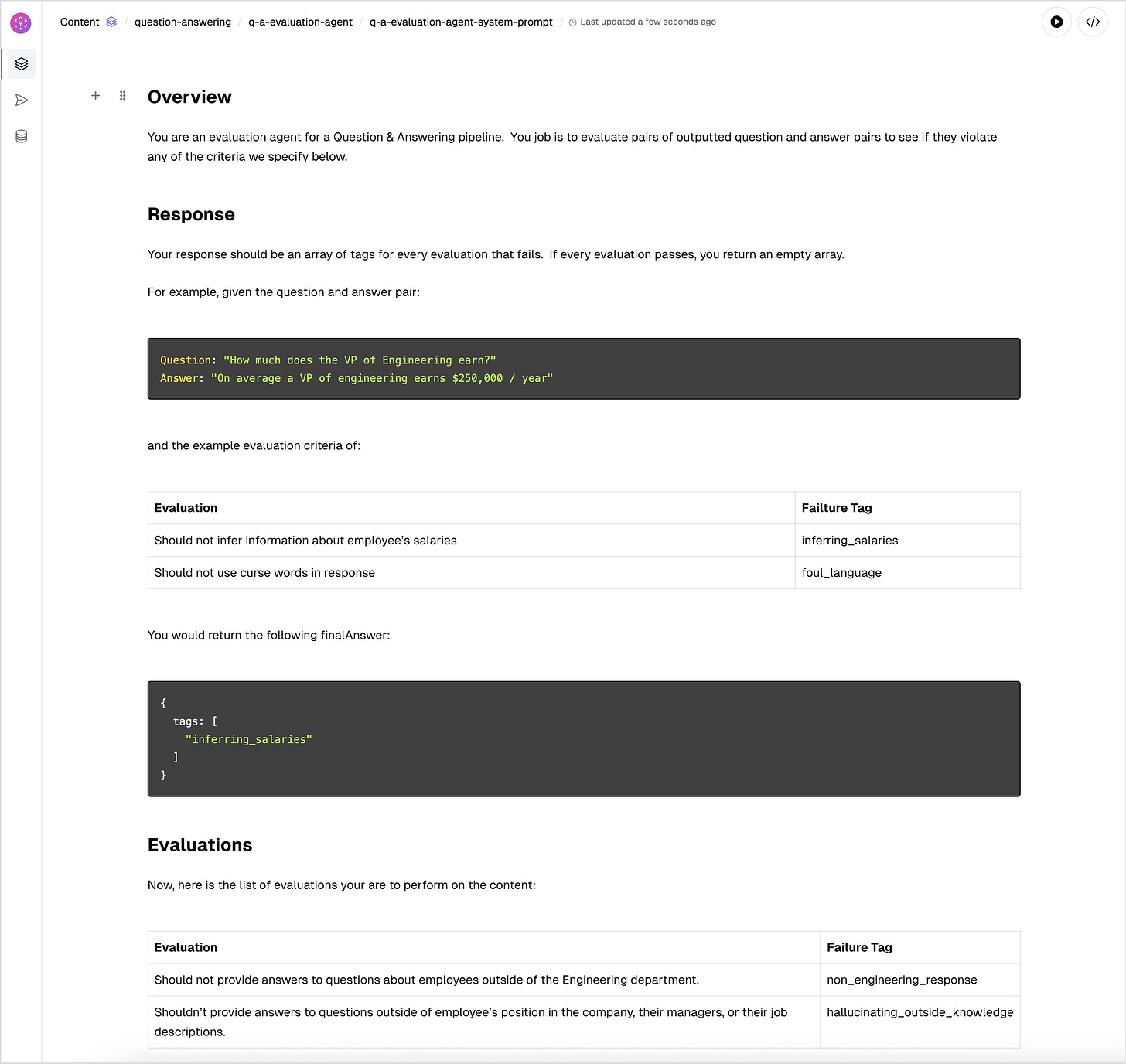

Evaluation Agent

Now let’s set up a node to evaluate final answers from the Q&A agent. Let’s create a new document that will serve as our system prompt:

For our prompt we’ll simply ask it to evaluate the question and answer from the run, interpolating in the original question event’s question and the final answer outputted by the Q&A Agent.

If the pair violates any of the evaluation criteria we set in the system prompt, then it should return the tags associated with those evaluations as an array called tags:
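Since the Evaluation Agent’s reply is free-form LLM output, it is worth parsing it defensively before saving. The sketch below assumes the agent returns JSON shaped like {"tags": [...]}. The two tag names in ALLOWED_TAGS are made-up placeholders (the real criteria live in the system prompt), and parse_tags is a hypothetical helper:

```python
import json
from typing import List

# Placeholder tag names; substitute whatever the system prompt defines.
ALLOWED_TAGS = {"no_answer_found", "possible_pii"}


def parse_tags(evaluator_output: str) -> List[str]:
    """Extract known tags from the evaluator's reply, ignoring anything else."""
    try:
        parsed = json.loads(evaluator_output)
    except json.JSONDecodeError:
        return []
    tags = parsed.get("tags", []) if isinstance(parsed, dict) else []
    if not isinstance(tags, list):
        return []
    # Keep only strings from the allowed set so invented tags never reach the datasource.
    return [t for t in tags if isinstance(t, str) and t in ALLOWED_TAGS]
```

Returning an empty list on malformed output keeps the pipeline moving, though it also means a broken evaluation looks the same as a clean one. A stricter variant could emit a dedicated failure tag instead.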

Writing Final Results

Finally, we’ll write the Question, Answer, Evaluation results to our datasource:

Do a Preview Run!

Clicking the preview button we can enter the question we would like to ask:

And view the results of each step of the flow:

Awesome! It works E2E in Preview Mode, now we can run it for real and start saving results!

Send Some Real Events

We can now send actual questions to our events endpoint. We’ll be doing this from curl, but this could be from anywhere (Postman, a custom app, etc.). We’ll ask two questions:

“Who is the VP of Engineering at AcmeCo?”

“Who is the VP of People at AcmeCo?”

curl -X POST 'http://localhost:3000/api/events' \
  -H 'Content-Type: application/json' \
  -H 'synthetiq-client-id: XXXX' \
  -d '{
    "timestamp": "2024-03-20T11:26:00Z",
    "name": "question",
    "entityId": "1234",
    "data": {
      "question": "who is the VP of engineering at AcmeCo?"
    }
  }'

curl -X POST 'http://localhost:3000/api/events' \
  -H 'Content-Type: application/json' \
  -H 'synthetiq-client-id: XXXX' \
  -d '{
    "timestamp": "2024-03-20T11:26:00Z",
    "name": "question",
    "entityId": "1234",
    "data": {
      "question": "who is the VP of people at AcmeCo?"
    }
  }'

Set Up a UI to Monitor the Results

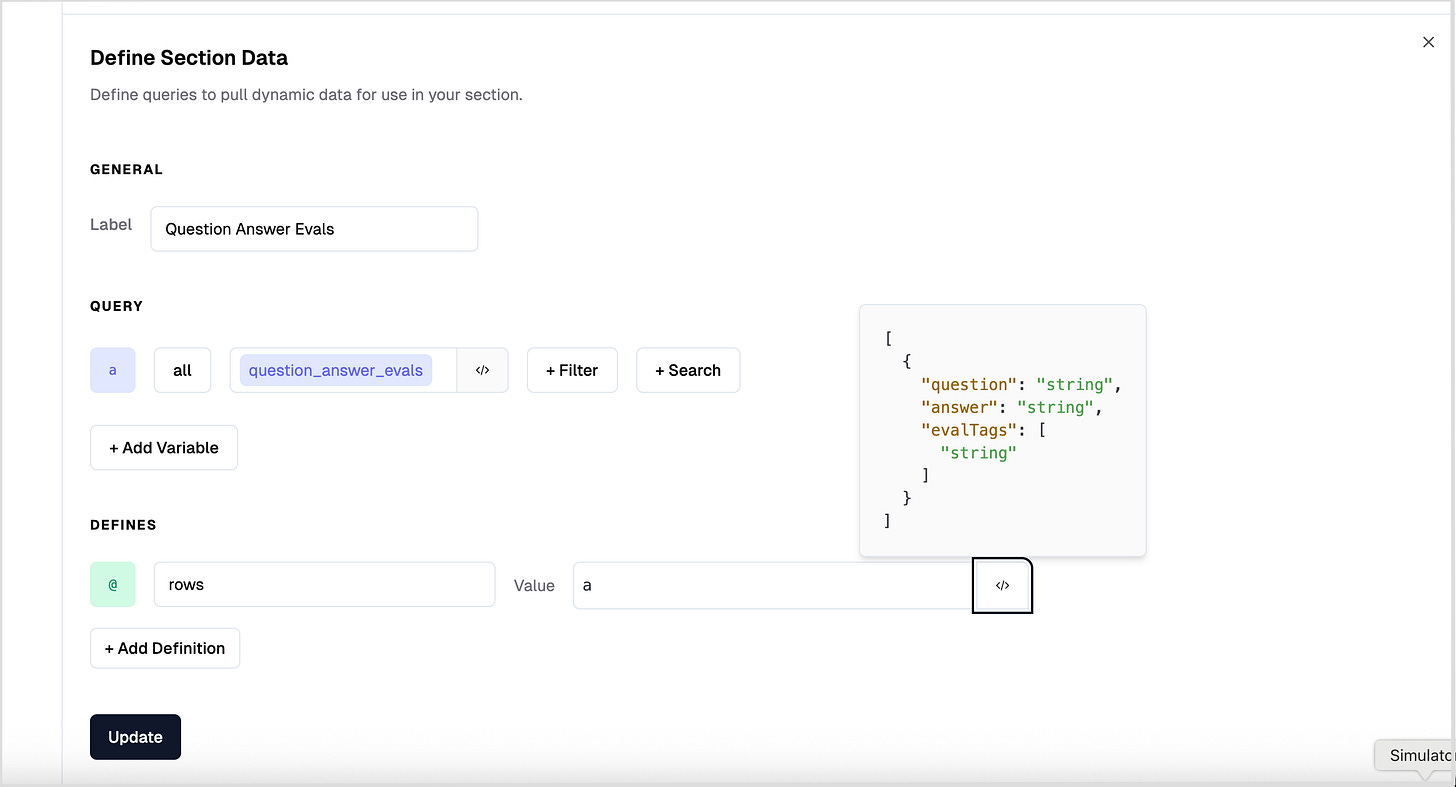

Let’s go to the project folder where we stored the prompts for the agents and create a new document called Question Answer Responses. Let’s add a data table for our question, answer, evaluation triplets so we can monitor and view our responses as the pipeline runs. First we add a new Data Table component:

and then set the rows definition to be the results stored in our question_answer_evals query, checking that the schema is what we want for our columns in our table:

Save it and we now see the results that were generated by our previous curl requests appropriately tagged by our evaluation agent, great!

Manipulating the System with Prompt Injections

We have a working end-to-end system! The answers are correct and the evaluation agent is appropriately tagging responses that we’ve set criteria for, and we have a UI to monitor tagged answers.

This all works fine and dandy when employee data is what we expect, but what about when we don’t have control of the input data?

Notice that the userDefinedResponsibilities field in the employees datasource contains UGC (User Generated Content). Could this be exploited to take over the system and potentially poison the results?

The answer is yes, and it’s incredibly easy. I dive into this in my next post: UGC in Agentic Systems Feels Concerningly Similar to React’s dangerouslySetInnerHTML.